OD-VOS: Object Detection for Video Object Segmentation

Abstract

Long-term video object segmentation (VOS) often requires manual intervention for selecting object masks, which can be impractical for various applications in robotics. In our project, termed OD-VOS, we propose a novel approach that integrates Vision Transformer for Open-World Localization (OWL-ViT) with XMem. OWL-ViT is an object detection network that can identify objects based on text queries, and XMem is a VOS framework which efficiently stores memory inspired by the Atkinson-Shiffrin memory model. OD-VOS uses OWL-ViT to automate the mask selection process for XMem. Our method eliminates the need for manual mask selection, thus streamlining the segmentation pipeline and reducing human effort. By automating the mask selection process, our framework enhances the applicability of VOS techniques.

Demo

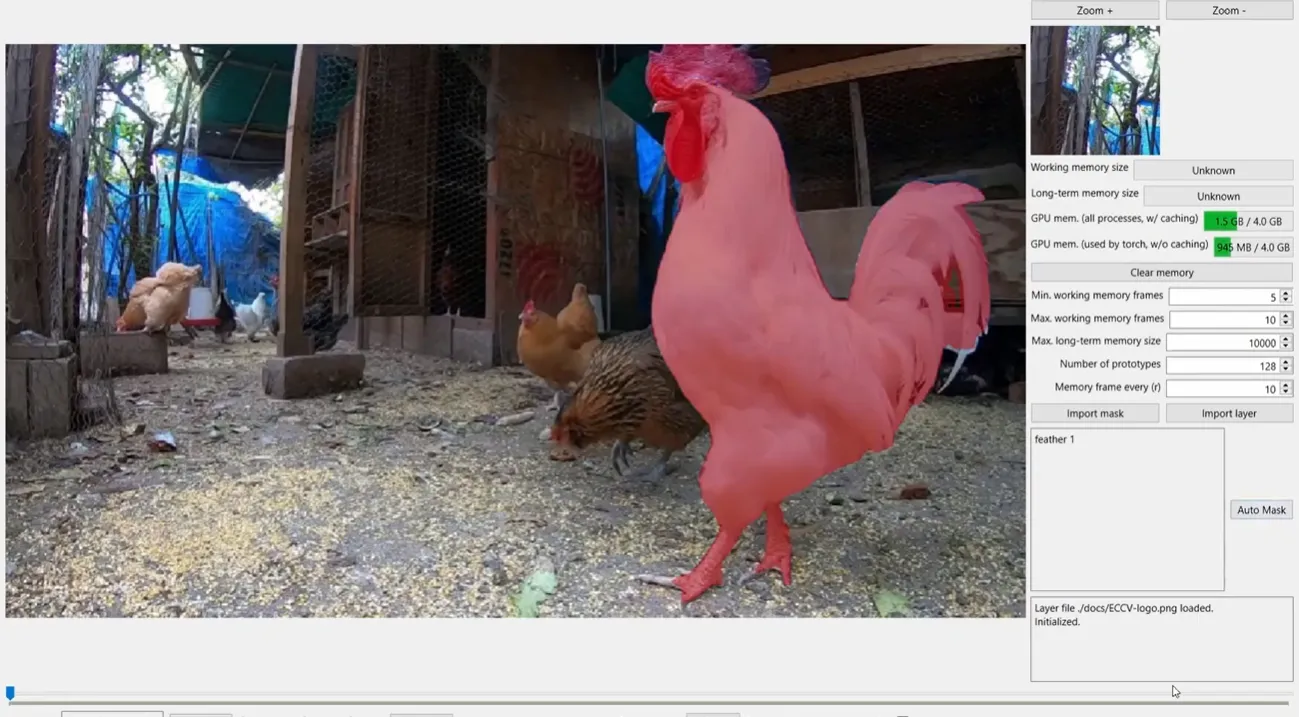

Example with 1 object:

Example with 2 objects:

Project Video

Citation

If you found our work helpful, consider citing us with the following BibTeX reference:

@article{chan2024deeprob,

title = {OD-VOS: Object Detection Video Object Segmentation},

author = {Benharush, Omer and Chan, Sarah and Kernan, Jack and Rucker, Max},

year = {2024}

}

Contact

If you have any questions, feel free to contact Sarah Chan.